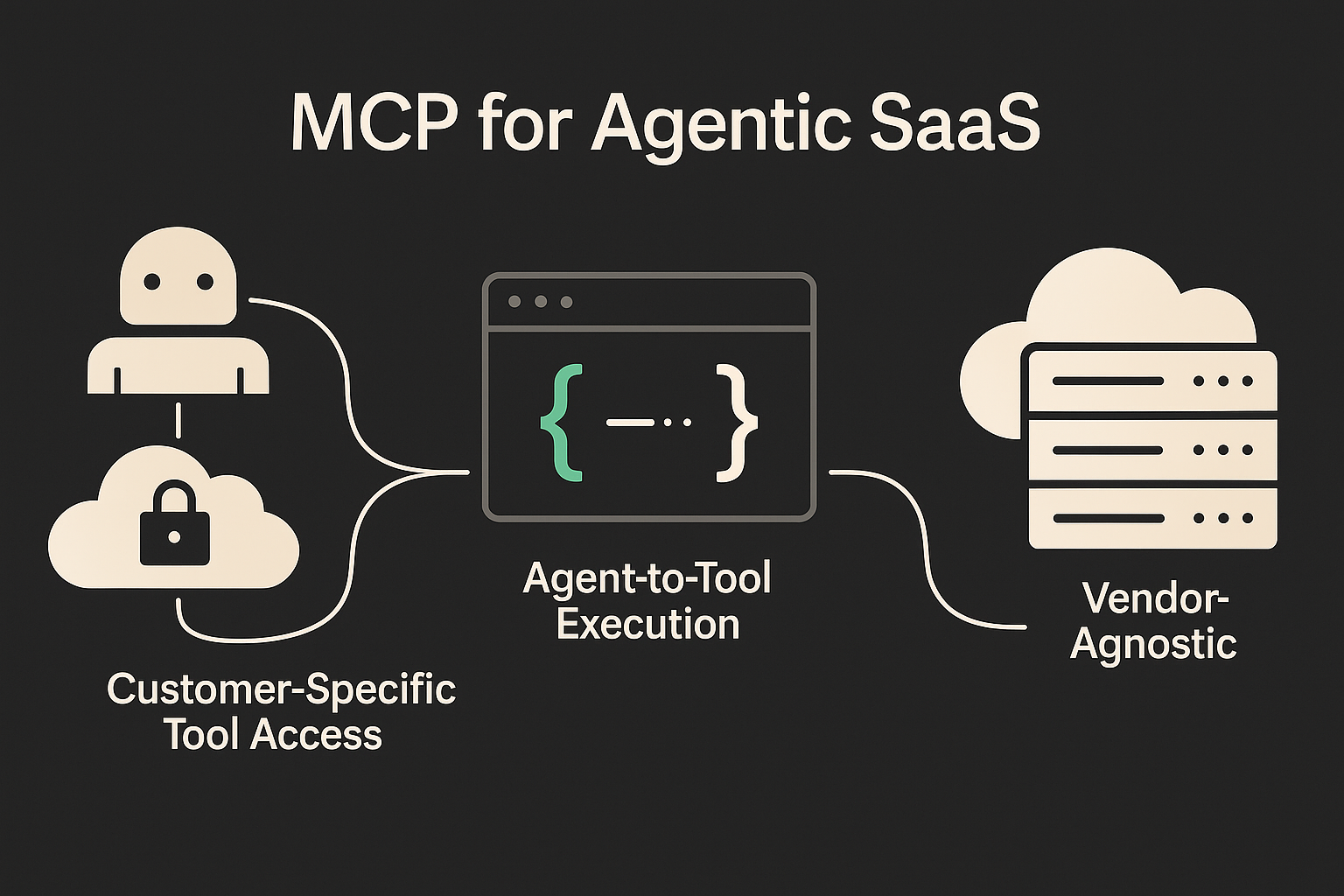

I have been asked about [Model Context Protocol](https://modelcontextprotocol.io/introduction) (MCP) recently. Here is an attempt to clear the fog around MCP so you stop expecting it to clean your kitchen sink.

# First up, does MCP deserve this mind share?

MCP is the first serious attempt at establishing a lingua franca for AI agents and external tools. It provides every agent with a common walkie-talkie channel, allowing them to request context, call actions, and stream results without needing to invent a new dialect each time.

# Why MCP deserves the hype for agentic SaaS

Agentic SaaS is not just a trend. It's the architecture shift driving the next generation of AI-native products. **If your platform runs AI agents that need to reason and take dynamic action**, not just generate output, you need a reliable way to move data, trigger tools, and maintain context in real time. That’s what MCP unlocks.

Here’s why it’s foundational:

## MCP can be used for customer-specific tool access

SaaS platforms rarely integrate with just one system. Each customer has their own CRM, ticketing system, ERP, or internal API. MCP makes it easier for the AI agent to route credentials, configuration, and context per tenant (using orchestration platforms like [Ampersand](https://withampersand.com)), allowing a single agent to operate across customer-specific environments, especially when paired with a remote MCP server that manages auth, config, and routing per tenant.

## A universal bridge for agent-to-tool execution

In agentic SaaS, your product often relies on agents calling multiple tools dynamically across customer environments. MCP provides agents with a consistent, vendor-neutral method for invoking tools and receiving structured responses. It speaks JSON-RPC 2.0 over stdio or HTTP with Server-Sent Events, making user-facing integrations more portable.

## Made for token-level, real-time exchange

Agents can stream partial results and receive tool responses in near real time.
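
Every one of those exchanges is a small JSON-RPC 2.0 message. As a rough sketch (not an official client), the Python below builds a `tools/call` request, frames it as a single newline-terminated line the way the stdio transport expects, and reads the text parts out of a reply. The tool name `crm_lookup`, its arguments, and the canned reply are made up for illustration; a real client would also perform the initialization handshake first, which is omitted here.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids should be unique within a session


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def encode_line(message: dict) -> bytes:
    """Serialize one message as a single newline-terminated UTF-8 line."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def parse_result(line: bytes, expected_id: int) -> list:
    """Return the text parts of a tool result; raise on either kind of error."""
    reply = json.loads(line)
    if reply.get("id") != expected_id:
        raise ValueError("response id does not match request id")
    if "error" in reply:  # protocol-level failure (unknown method, bad params)
        raise RuntimeError(reply["error"]["message"])
    result = reply["result"]
    if result.get("isError"):  # the tool itself reported a failure
        raise RuntimeError("tool reported an error")
    return [part["text"] for part in result["content"] if part["type"] == "text"]


# Hypothetical tool and arguments, for illustration only.
request = make_tool_call("crm_lookup", {"email": "ada@example.com"})
wire = encode_line(request)

# A canned reply standing in for whatever a real server would send back.
canned = encode_line({
    "jsonrpc": "2.0",
    "id": request["id"],
    "result": {
        "content": [{"type": "text", "text": "Ada Lovelace, Acme Corp"}],
        "isError": False,
    },
})
texts = parse_result(canned, request["id"])
```

Note the two failure paths in `parse_result`: a JSON-RPC `error` object means the call never reached the tool, while `isError` inside a normal result means the tool ran and failed. Telling them apart is left to the caller.
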
Near-real-time streaming is especially powerful in GTM use cases like lead enrichment, real-time CRM updates, and conversational sales assistants, where latency-sensitive interactions directly impact user experience and conversion rates ([MCP Transports](https://modelcontextprotocol.io/transports)).

## Minimal surface, maximum leverage

The [entire schema](https://github.com/modelcontextprotocol/modelcontextprotocol/blob/main/schema/2025-03-26/schema.json) is ~350 lines. The protocol itself fits on one page. For agentic SaaS teams shipping fast, this is a feature, not a bug. It’s more like a system call than a framework; you can reason about it, wrap it, extend it, and drop it into your stack without heavy abstraction overhead.

## Vendor-agnostic by design

Anyone can run an MCP server or speak the protocol. It works across clouds, agents, and programming languages. For SaaS teams, that means you can embed agent capabilities into your product without handing over control to a proprietary platform or rewriting everything when your infrastructure shifts. There’s no lock-in.

## Used in real-world agent infrastructure

Forward-looking stacks like [Cursor](https://docs.cursor.com/context/model-context-protocol), [OpenAI Assistants](https://platform.openai.com/docs/assistants/overview), and [Ampersand](https://docs.withampersand.com) work with MCP to let agents talk to external tools safely and predictably.

In short, MCP is becoming the I/O protocol for agentic applications. But it’s not an agent runtime, not a scheduler, not a state machine. It’s plumbing, and you’re still the plumber.

# So what is not-MCP?

## 1. MCP is _not_ an agent brain

MCP ferries requests and responses; it does not plan, reason, or chain thoughts. The spec explicitly leaves "agent logic" to higher-level frameworks ([MCP README](https://github.com/modelcontextprotocol/modelcontextprotocol/blob/main/README.md)).
If you're building a SaaS product with embedded agents, you’ll still need planning engines, memory systems, and evaluators to turn context into reasoning. Think of MCP as a walkie-talkie, useful only when you know what to say.

## 2. MCP is _not_ a state machine or workflow runtime

When building customer-facing agents that use a remote MCP server, don’t expect the protocol to remember steps, retry failures, or route conditional logic. MCP transmits requests and responses; it does not manage task sequencing, timeouts, or error recovery.

If your agent experience spans multiple steps (like triaging, logging, escalating, and reporting), you’ll need to coordinate that logic yourself. Most teams layer on an orchestrator or state machine, custom or off-the-shelf, to manage workflow state across tool calls. MCP won’t do this for you. It’s a message bus, not a playbook.

## 3. MCP is _not_ your security department

MCP itself is transport-focused: it defines the envelope, not the vault. The spec doesn’t include built-in support for TLS, RBAC, or audit logging; those responsibilities fall to the server implementation. This means access control, tenant isolation, and secret handling must be layered in by whoever operates the MCP infrastructure. The protocol won't enforce policy boundaries, but it can carry secure tokens or encrypted blobs if your system is built that way.

## 4. MCP is _not_ an ETL, CDC, or bi-directional sync engine

MCP streams one request or response at a time. It doesn’t batch reads, diff schemas, or manage pagination across large datasets. It also doesn’t track data lineage, emit change events, or support multi-source conflict resolution.

If you're building a customer-facing integration that requires bi-directional sync, where changes flow in both directions with consistency, conflict handling, and auditability, you’ll need robust synchronization infrastructure. MCP is optimized for message exchange, not continuous data sync.
Trying to use it for system-wide replication is like using a walkie-talkie to coordinate a satellite upload.

## 5. MCP is _not_ a real-time video or binary transport

The current MCP transport specification only supports UTF-8 encoded JSON over text-based channels like [Server-Sent Events and stdio](https://modelcontextprotocol.io/transports). It does not support binary formats like Protobuf, WebRTC, or raw media streaming.

MCP is optimized for structured, token-level context exchange and tool invocation, not for pushing high-throughput media, real-time sensor data, or binary telemetry. If your agent needs to stream video, IoT data, or audio chunks, MCP is the wrong protocol layer for that job.

## 6. MCP is _not_ cost-aware or rate-limit-aware

OpenAI’s own [Responses API guide](https://platform.openai.com/docs/api-reference/responses) makes it clear: every tool call made via a remote MCP server is a discrete network request and can incur separate billing events. The protocol has no built-in metadata for pricing, quotas, or throttling logic.

MCP won’t prevent an agent from calling a high-cost tool 10,000 times, nor will it warn you about hitting your usage cap. These are responsibilities of the implementer. If you want to track usage, budget constraints, or cost attribution, you’ll need to build that governance yourself outside the protocol.

## 7. MCP is _not_ a universal data model

MCP transmits tool inputs and outputs exactly as they’re defined; it does not normalize or transform data schemas. If one customer’s CRM uses `Phone__c` and another uses `WorkPhone`, it’s up to your agent to know the difference.

MCP won’t validate, align, or infer schema differences across tenants. There’s no mapping layer, metadata registry, or schema introspection in the spec. You’ll need domain-specific logic, adapters, or explicit configuration to ensure your agent understands how to handle the data it receives.

## 8. MCP is _not_ an error-handling framework

For agentic apps and customer-facing integrations, graceful failure modes are non-negotiable. But MCP won’t help you there. It forwards tool responses, including vague or malformed errors, exactly as they’re received. There’s no retry logic, error classification, or fallback behavior defined in the protocol. If a downstream API chokes or returns an obscure 500, your agent gets raw JSON and vibes.

Customer trust depends on resilient behavior: retries, escalation paths, logging, and user-friendly messages. MCP provides the plumbing to move data, but it’s your job to wrap it with reliability and UX polish. Treat MCP as the transport layer; the resilience layer is still on you.

## 9. MCP is _not_ a consent or governance layer

SaaS buyers care about who gets access to what. MCP won’t enforce scopes, approvals, or consent flows. That’s up to your frontend, backend, or agent runtime. MCP will blindly relay any request, risky or not.

If you need end-user consent flows or field-level access control, consider layering them on through your own interface (or through Ampersand's robust field mappings and headless UI library) so that scoped permissions are integrated into the config layer.

## 10. MCP is _not_ an analytics or observability system

Want to know which agents are slow in which customer environments? Which customers are error-prone? MCP won’t tell you. It has no telemetry, spans, or structured logs.

For a SaaS platform to meet SLAs, you’ll need to log every request around MCP and trace issues yourself. Agentic SaaS teams must build dashboards for visualizing customer-level integration performance.

## 11. MCP is _not_ a versioning or compatibility layer

Tools evolve. Fields change. SaaS integrations are long-lived. MCP won’t warn your agent that it’s calling a tool with a deprecated param. If you want graceful degradation, version negotiation, or schema evolution, it’s on your infra.
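
One piece of that infra can be quite small. The sketch below, in Python, assumes you have a JSON Schema for each tool's inputs and that retired parameters are marked with JSON Schema's `deprecated` annotation. The `log_crm_note` tool shape, its fields, and the `check_call` helper are hypothetical; a production check would use a full JSON Schema validator rather than this hand-rolled comparison.

```python
def check_call(input_schema: dict, arguments: dict) -> list:
    """Compare planned tool arguments with a tool's input schema and list problems."""
    properties = input_schema.get("properties", {})
    problems = []
    # Required parameters the caller forgot to supply.
    for name in input_schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required param: {name}")
    # Parameters the tool no longer knows, or has marked as deprecated.
    for name in arguments:
        if name not in properties:
            problems.append(f"unknown param: {name}")
        elif properties[name].get("deprecated"):
            problems.append(f"deprecated param: {name}")
    return problems


# Hypothetical input schema for a `log_crm_note` tool after a field rename.
schema = {
    "type": "object",
    "properties": {
        "contact_id": {"type": "string"},
        "note": {"type": "string"},
        "body": {"type": "string", "deprecated": True},  # superseded by `note`
    },
    "required": ["contact_id", "note"],
}

# Arguments that an older agent prompt or config still produces.
planned = {"contact_id": "c_123", "body": "Called, left voicemail"}
problems = check_call(schema, planned)
# problems == ["missing required param: note", "deprecated param: body"]
```

Running a check like this in CI, against the argument shapes your agent is configured to emit, surfaces drift before a customer's tool call fails in production.
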
You can enforce those policies with declarative configs or platform layers that validate schema compatibility at deploy time.

## 12. MCP is _not_ a dev environment or simulator

Testing agents and tools in development environments is crucial, especially for customer-facing workflows, where incorrect tool calls can impact production data. But MCP doesn’t provide any scaffolding for this. There are no testing flags, dry-run modes, or stubbing conventions.

If you want to simulate tool calls, validate payloads, or verify agent reasoning flows without hitting real systems, you’ll need to build your own mocks, simulators, or local test harnesses. Vibe coders take notice! As agent complexity increases, dev/test infrastructure becomes more important, and MCP leaves that entirely up to you.

## 13. MCP is _not_ opinionated about tool semantics

One call might log a CRM note. Another might delete a Salesforce record. MCP won’t tell you which is safe or dangerous.

If you want to warn users, add confirmations, or sandbox certain actions, that logic lives outside the protocol, in your app or agent server. You might annotate tools with metadata like `readOnly`, `dangerous`, or `requiresConsent`, but enforcement has to happen in your infrastructure.

# What MCP might solve and what it won't

MCP is evolving, and some of today’s missing features could be addressed by future versions of the spec or widely adopted server implementations. Here's what's plausible, and what's not.

## What Might Get Solved Soon

### 1. Structured Error Taxonomies

Expect future MCP revisions or conventions to introduce structured error taxonomies, such as `TOOL_TIMEOUT`, `INVALID_INPUT`, or `AUTH_FAILED`. Today, MCP forwards raw tool errors as-is; tomorrow, a common vocabulary may help agents interpret and respond more intelligently.

### 2. Tool Metadata and Versioning

Future schemas could include optional fields like `version`, `readonly`, or `requiresConsent`, enabling agents to reason more safely and behave more predictably, especially in regulated or customer-facing environments. This metadata wouldn’t change how tools execute, but it could help orchestrators and agent runtimes make smarter decisions without hardcoding assumptions or duplicating config elsewhere.

### 3. Consent Flags

While full auth flows are out of scope, a soft schema flag like `requiresUserApproval: true` could emerge, useful for triggering UI prompts or admin approvals. OpenAI implements a version of this today via its [tool consent dialog](https://platform.openai.com/docs/assistants/quickstart#overview), where developers explicitly approve which tools an assistant can access. A standardized flag for agent-controlled trust boundaries is a natural next step.

### 4. Tracing and Meta Fields

Expect conventions (or spec additions) for `trace_id`, `agent_id`, and timestamps to support basic observability. This could become part of the MCP envelope.

## Anthropic? TBD.

It’s unclear whether Anthropic will push into the application layer. If Claude-native tool systems or hosted MCP servers emerge, they might introduce consent or governance layers, but nothing in Claude’s current architecture signals strong movement in that direction.

For now, the evolution of MCP’s application boundary is more likely to be led by agentic SaaS application companies and agentic middleware companies like Ampersand.

If you want to chat MCP for your SaaS, join our [Discord](https://discord.gg/BWP4BpKHvf) or DM me.